AI can do far more than analyze data in the blink of an eye or serve as a scapegoat for doomsayers. Considering how toxic the internet can get, maybe artificial intelligence can be put to work making the web safer. But can it really do that?

A woman uses a laptop on April 3, 2019, in Abidjan. - According to figures from the national police's platform for the fight against cybercrime (PLCC), nearly one hundred internet scammers were arrested in 2018 in Ivory Coast, a country known for its online scammers, the Ivorian telephony regulatory authority announced on April 2, 2019. (Photo by ISSOUF SANOGO / AFP)

There are many reasons to think it can. It comes down to how much harmful content there is on the internet and how well AI's capabilities can cope with all of it.

All About Processing Tons Of Data

According to VentureBeat, content moderation is where the power of artificial intelligence can make a massive difference. Human content moderators already exist for the likes of social media sites, but they can only do so much (and frankly, their jobs can be quite detrimental to their mental health).

With a machine, there's no risk of the content moderator going nuts. Just consider how much content there is on the internet. According to LiveScience, there are an estimated 1 million exabytes of content. A single exabyte is equivalent to 1 million terabytes of data. Let that sink in.

Even if you had a veritable army of content moderators looking at all of that data around the clock, every day of the year, they would not be able to sift through all of it, let alone moderate it and decide which items are harmful and which aren't. Once again, that's where the processing power of artificial intelligence comes in.
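To put rough numbers on that, here is a back-of-the-envelope sketch in Python. Only the two size figures come from the estimates above; the number of moderators and how much each one could review per day are made-up assumptions, chosen to be absurdly generous.

```python
# Back-of-the-envelope check of the scale argument.
# Only the two size figures come from the article; everything else is assumed.

EXABYTE_IN_TERABYTES = 1_000_000   # article: 1 exabyte = 1 million terabytes
TOTAL_EXABYTES = 1_000_000         # article: estimated content on the internet

MODERATORS = 1_000_000             # assumption: an army of one million people
TB_PER_MODERATOR_PER_DAY = 1       # assumption: wildly generous review rate


def years_to_review(total_exabytes, moderators, tb_per_day):
    """Years needed for the whole team to look at everything once."""
    total_terabytes = total_exabytes * EXABYTE_IN_TERABYTES
    days = total_terabytes / (moderators * tb_per_day)
    return days / 365


years = years_to_review(TOTAL_EXABYTES, MODERATORS, TB_PER_MODERATOR_PER_DAY)
print(f"Roughly {years:,.0f} years of nonstop review")
```

Even with a million people each clearing a terabyte a day, which no human could do, the backlog would take thousands of years, and that ignores everything uploaded in the meantime.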

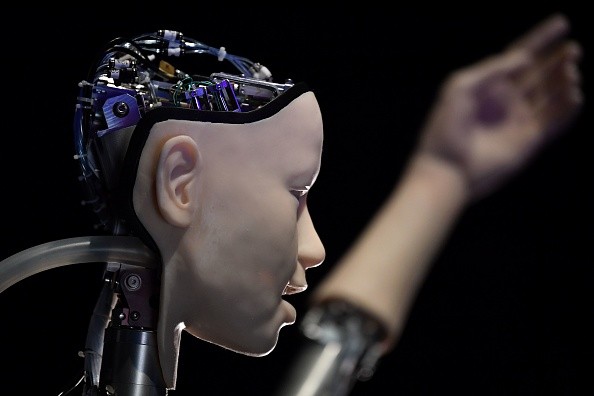

An AI robot with a humanistic face, entitled Alter 3: Offloaded Agency, is pictured during a photocall to promote the forthcoming exhibition entitled "AI: More than Human", at the Barbican Centre in London on May 15, 2019. - Managing the health of the planet, fighting against discrimination, innovating in the arts: the fields in which artificial intelligence (AI) can help humanity are innumerable.

While humans can adapt to sudden changes more quickly than machines, they can only truly focus on one task at a time, so they spend more time solving a single problem. Compare that to the "focus" of an AI, and there is no competition. A good example is DeepMind's AlphaFold, an AI system that predicted some 350,000 protein structures far faster than human scientists, who can spend months working out a single structure.

Once the AI has been fed enough data, it can move on to the next phase of making a safer internet: categorizing all of the content.


Identifying And Categorizing Content

Artificial intelligence algorithms are already employed by numerous companies to scrub their content clean. Social media sites like Twitter are a good example. Twitter uses machine learning to help it identify and even remove terrorist propaganda. Aside from that, the algorithm also flags tweets that violate the platform's terms of service.

But despite the apparent effectiveness of these algorithms for online safety, they're not without faults. At times, the AI mistakenly labels safe content as "unsafe," or outright fails to flag harmful content, in cases where catching it might have prevented a tragedy.
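Both kinds of mistakes are easy to reproduce with a toy example. The sketch below is a deliberately naive keyword flagger, not how Twitter or any real platform moderates content; the word list, the sample posts, and their "harmful" labels are all hypothetical.

```python
# Toy keyword flagger showing false positives and false negatives.
# Hypothetical illustration only; real moderation systems use trained models.

BLOCKLIST = {"attack", "bomb"}  # assumed list of "dangerous" words


def flag(post: str) -> bool:
    """Flag a post if any word, stripped of punctuation, is on the blocklist."""
    words = {word.strip(".,!?").lower() for word in post.split()}
    return bool(words & BLOCKLIST)


# (post, is_actually_harmful) pairs, with labels made up for the example
posts = [
    ("That concert was the bomb!", False),
    ("Meet at the usual place, bring the package.", True),
    ("We will attack at dawn.", True),
]

# Safe content labeled "unsafe"
false_positives = [p for p, harmful in posts if flag(p) and not harmful]
# Harmful content that slipped through
false_negatives = [p for p, harmful in posts if not flag(p) and harmful]

print("Wrongly flagged:", false_positives)
print("Missed:", false_negatives)
```

The slang in the first post trips the filter, while the second post avoids every listed word and sails through. Machine learning models are far more capable than a word list, but they can fail in both of these directions too.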

What About Cybersecurity?

Online safety is nothing without tackling cybersecurity. So many people have bared their personal lives on the internet, leaving all of their information ripe for the taking. Anybody with ill intent can use that information to commit crimes such as fraud or identity theft.

As to whether AI can help with improving cybersecurity, the answer is yes. Numerous tech companies are already working on it, including Big Tech giants such as Microsoft and IBM (via Interesting Engineering). Microsoft's Windows Defender, for instance, actually uses artificial intelligence to detect and protect against various security threats.

The FBI and CISA released a joint advisory regarding the latest vulnerability exploitation carried out by Russian hackers against an NGO.

Maintaining online safety is all about keeping abreast of the times. Cybercriminals constantly vary their methods and try to throw authorities off their trail. Artificial intelligence can keep up with the rapid evolution of cyber threats and perhaps stop them before they even happen, for example by predicting the risk of data breaches (via Computer.org).


This article is owned by Tech Times

Written by RJ Pierce